Over the past few weeks, French start-up Mistral has been making a lot of waves in the world of generative AI.

Founded in May 2023 by three engineers from Google DeepMind and Meta, Mistral has already raised 385 million euros in capital in six months and is currently valued at around 2 billion euros!

This unbridled growth is already positioning Mistral as a major player in the sector, and a serious competitor to OpenAI.

Mistral continues to develop its models and capabilities, and could well play a key role in the future development of European AI. The company’s open-source approach and ethical commitment align with the preferences of many European companies.

Mistral’s success is underpinned by the existence of a French AI ecosystem that is going from strength to strength. In September, Xavier Niel announced strategic investments in AI estimated at around 200 million euros. Part of this investment is earmarked for the purchase of GPUs from Nvidia, in order to provide the cloud services company Scaleway with the computing power it needs for AI, and make it available to European start-ups.

But money and computing power aren’t everything: a concentration of talent is also required. In November, Kyutai was announced: a new AI research laboratory based in Paris, backed by 300 million euros of investment and headed by leading AI researchers from Google and Meta. Moreover, the research labs that Google DeepMind and Meta run in the region form a pool of talent that can feed new companies.

For its part, Station F, one of the world’s largest incubators for technology start-ups, is also in Paris, supporting the first steps of start-ups and playing a unifying role, notably through events such as AI-Pulse.

All this means that France is starting to seriously compete with Great Britain, until now the dominant player in European AI.

This positive development is worth mentioning: we often hear of European start-ups moving to the USA when their capital requirements grow, but the reverse is also beginning to happen. The American company Poolside AI has decided to move to Paris, attracted in particular by salary costs that are far lower than in the USA.

Available Mistral models

Let’s take a closer look at the models published by Mistral. There are three, called Mistral-7B, Mixtral-8x7B and Mistral-Medium, in order of increasing power.

- Mistral-7B converses only in English and has 7 billion parameters, which makes it small enough to run locally on most recent computers. This model is freely available as open source.

- Mixtral-8x7B handles English, French, German, Italian and Spanish. Its architecture is called “Mixture of Experts”. This model is also available as open source, but given its size, only machines with a large amount of memory can run it.

- Mistral-Medium: this is an enhanced version of Mixtral-8x7B with the same basic architecture. Mistral claims that its performance is close to GPT-4 and that it excels at programming tasks. This model is not available as open source; it can only be used through paid access to the Mistral API.
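To make “given its size” concrete, here is a back-of-the-envelope estimate of the memory needed just to hold each open model’s weights. It is a rough sketch under stated assumptions: 2 bytes per parameter at 16-bit precision, 1 byte at 8-bit and half a byte at 4-bit quantization, with activations, cache and runtime overhead ignored (so real requirements are higher). The precision labels are illustrative and not tied to any particular inference tool.

```python
# Back-of-the-envelope estimate of the memory needed just to store model weights.
# Assumption: memory ~= parameter count x bytes per parameter; activations,
# KV cache and runtime overhead are ignored, so real requirements are higher.

PARAM_COUNTS = {
    "Mistral-7B": 7e9,       # rounded figure quoted in the article
    "Mixtral-8x7B": 46.7e9,  # total parameters, all experts included
}

# Bytes per parameter at a few common storage precisions (illustrative labels).
BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Return the approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for model, n_params in PARAM_COUNTS.items():
        sizes = ", ".join(
            f"{precision}: {weight_memory_gb(n_params, precision):.1f} GB"
            for precision in BYTES_PER_PARAM
        )
        print(f"{model} -> {sizes}")
```

At 16-bit precision, Mistral-7B’s weights take about 14 GB, and 4-bit quantization brings that down to about 3.5 GB, within reach of an ordinary computer. Mixtral-8x7B needs roughly 93 GB at 16-bit. Note that all eight experts must sit in memory even though only two are used per token: the sparse design saves computation, not memory.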

The Mixture of Experts architecture used by Mixtral-8x7B comprises 8 distinct groups of parameters (the “experts”), plus a router block that, for each token at each layer of the model, activates only the two most relevant experts and then recombines their outputs. Thanks to this approach, the 46.7-billion-parameter model requires “only” the computing power of a 13-billion-parameter model, which speeds up generation by a factor of around 3.5. It is suspected that GPT-4, whose architecture has not been published, also uses a model of this type, with rumors of 8 experts of 220 billion parameters each (i.e. about 1.7 trillion parameters in total).
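The routing step described above can be illustrated with a small sketch in plain Python. It is a toy, not Mistral’s implementation: the experts here are hypothetical single linear maps (in Mixtral they are full feed-forward blocks), the dimensions are tiny, and the router is assumed to score each expert with a dot product, keep the two best and weight them with a softmax over those two scores only.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


class LinearExpert:
    """Hypothetical toy expert: a single linear map standing in for a feed-forward block.

    It counts its calls so we can check that unselected experts do no work.
    """

    def __init__(self, matrix):
        self.matrix = matrix
        self.calls = 0

    def __call__(self, vec):
        self.calls += 1
        return matvec(self.matrix, vec)


def moe_layer(x, router, experts, top_k=2):
    """Apply a sparse Mixture-of-Experts layer to one token vector x.

    router: one row per expert; the dot product router[i] . x is expert i's score.
    Returns the combined output, the chosen expert indices and their weights.
    """
    scores = matvec(router, x)
    # Keep only the top_k experts with the highest router scores.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the selected scores only, so the weights sum to 1.
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(weights, chosen):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen, weights


if __name__ == "__main__":
    rng = random.Random(0)
    dim, n_experts = 4, 8

    def random_matrix(rows, cols):
        return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

    experts = [LinearExpert(random_matrix(dim, dim)) for _ in range(n_experts)]
    router = random_matrix(n_experts, dim)
    token = [rng.gauss(0.0, 1.0) for _ in range(dim)]

    output, chosen, weights = moe_layer(token, router, experts)
    print("chosen experts:", chosen, "weights:", [round(w, 3) for w in weights])
    print("calls per expert:", [e.calls for e in experts])
```

Running it shows that exactly two of the eight experts are called for the token. In the real model, the total is not simply 8 × 7 billion and the per-token cost is not 2/8 of it, because the attention layers and embeddings are shared and only the feed-forward blocks are duplicated per expert. Mistral reports about 12.9 billion parameters active per token, and 46.7 / 12.9 ≈ 3.6, in line with the speed-up mentioned above.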

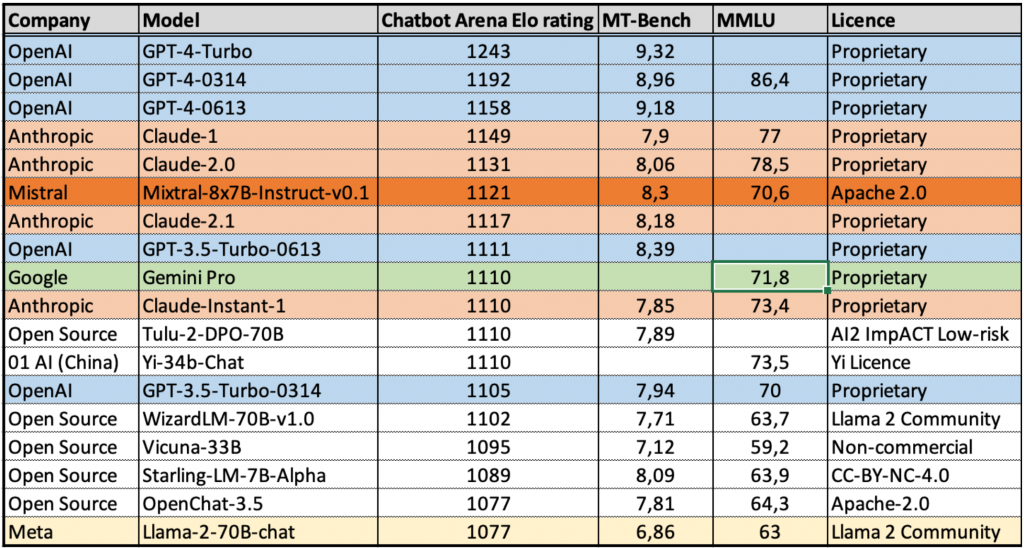

A large part of the Mistral craze stems from the performance of these models relative to their size. You can see that Mixtral-8x7B is very well positioned in the Hugging Face ranking:

I’ll explain how to run these models locally in a future article, but in the meantime, you can try out Mistral’s three models on Perplexity.ai’s web interface, accessible here.

All you have to do is choose the model you want from the drop-down menu in the bottom right-hand corner (which also offers other models; names beginning with pplx correspond to those developed by Perplexity.ai).

Beware that your data are not kept confidential on the free version of Perplexity.ai. Mistral models can be accessed securely from AIdoes.eu, among many other platforms, with useful additional functionality.

Translated with DeepL and adapted from our partner Arnaud Stevins’ blog (Dec 25th, 2023).
